Motion tracking is the process of recording the movement of objects and people, capturing their change in position, velocity, and acceleration. It has applications in fields such as filmmaking, video production, animation, sports analysis, robotics, and augmented reality. Video games use motion tracking to animate characters in sports titles such as baseball, basketball, or football games, and movies use it to create CGI (computer-generated imagery) effects.

In sports, professionals use motion tracking for biomechanical analysis. It lets them study movement patterns and performance metrics, and identify and improve athletes’ biomechanics. The concept of motion tracking has existed for decades. Before the deep learning era, motion was tracked with mechanical systems (devices that used rotating disks to record motion sequences) and with manual methods (in which each object in each frame was traced by hand). Before we dive into motion tracking, let’s briefly look at the methods used in the past and how they evolved.


History of Motion Tracking

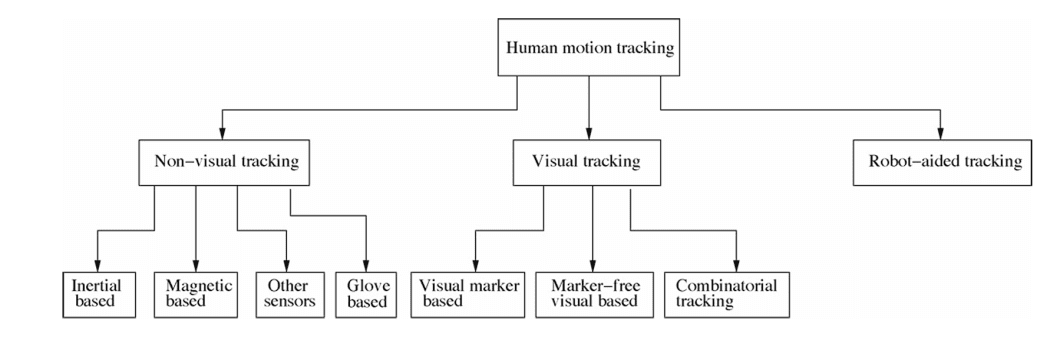

Motion tracking methods can be roughly divided into four categories:

- Manual Tracking: Points of interest are identified by hand in each image. This requires no markers, but it is a time-consuming, laborious process with limited accuracy and usability.

- Non-Visual Tracking: Uses various sensors attached to the body to capture the motion.

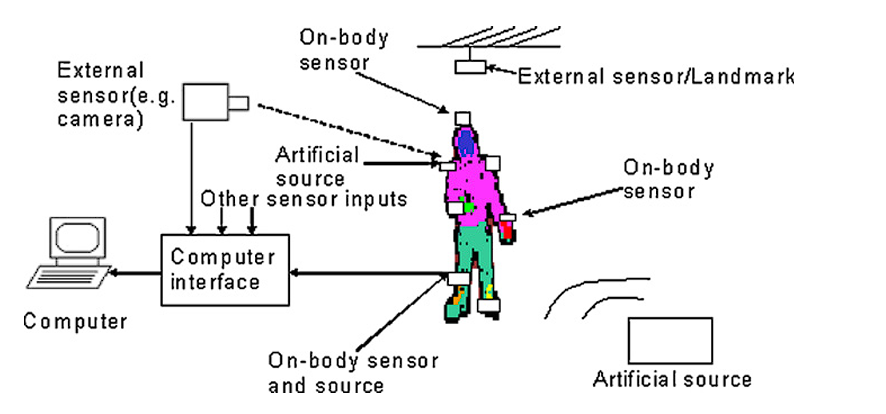

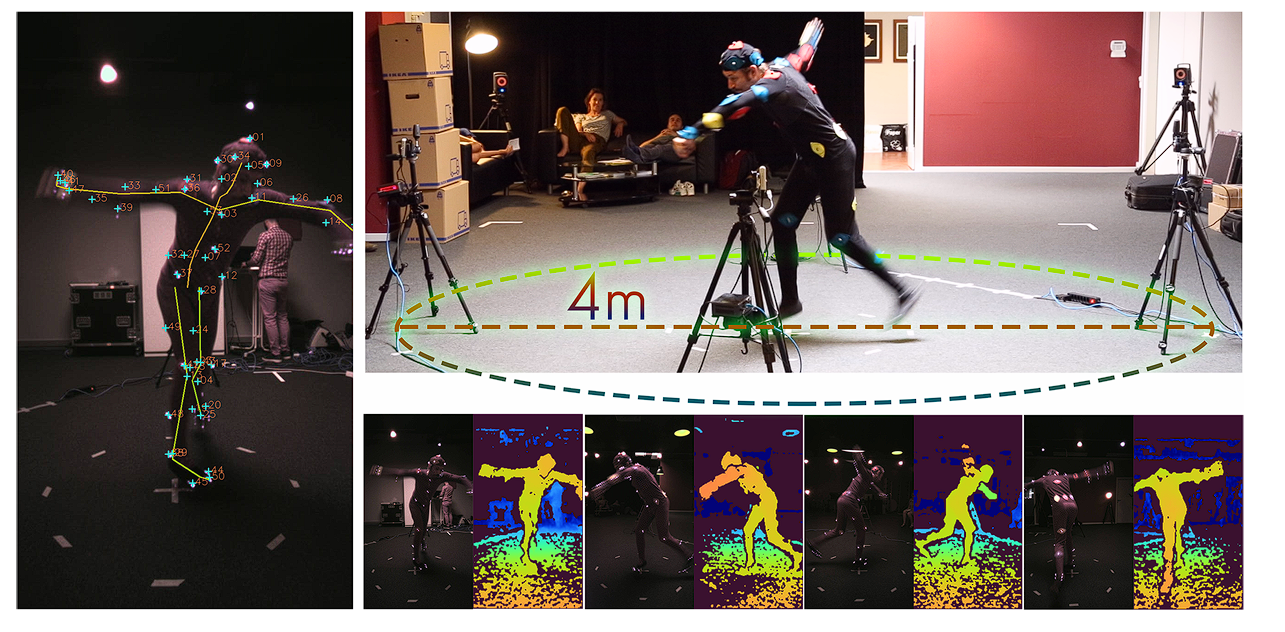

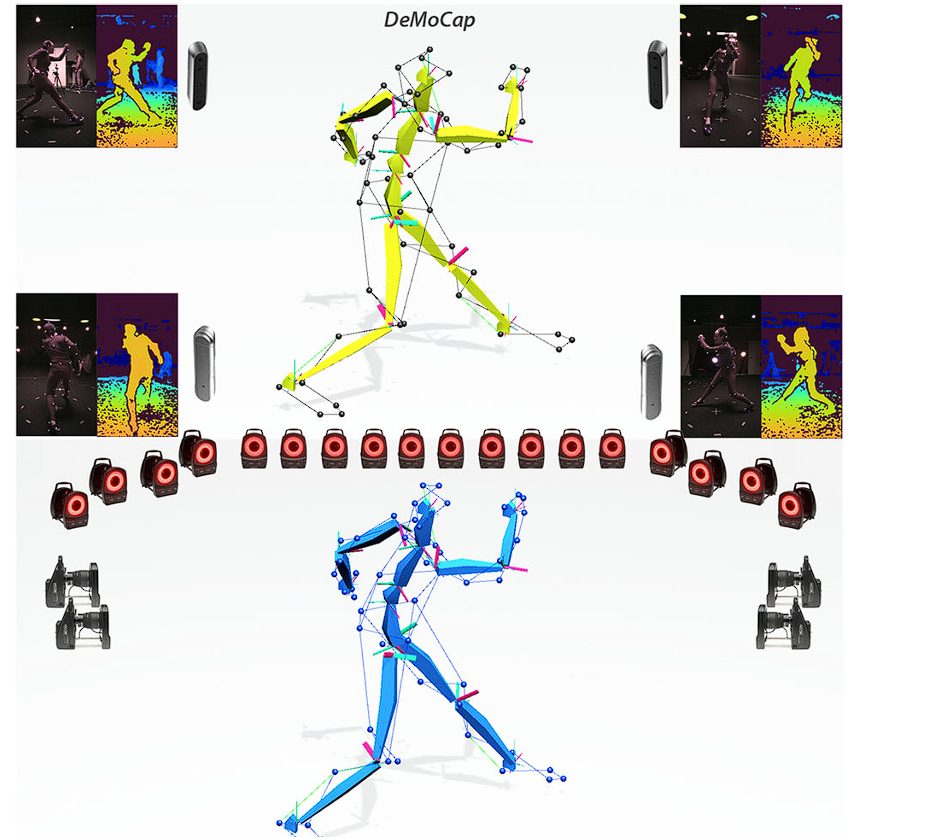

Sensor-based tracking system

- Marker-Based Systems: Marker-based systems use physical markers attached to the subject’s body or object. Multiple cameras track these markers and capture their positions in 3D space. The data collected is then processed to create a detailed and precise model of the subject’s movement.

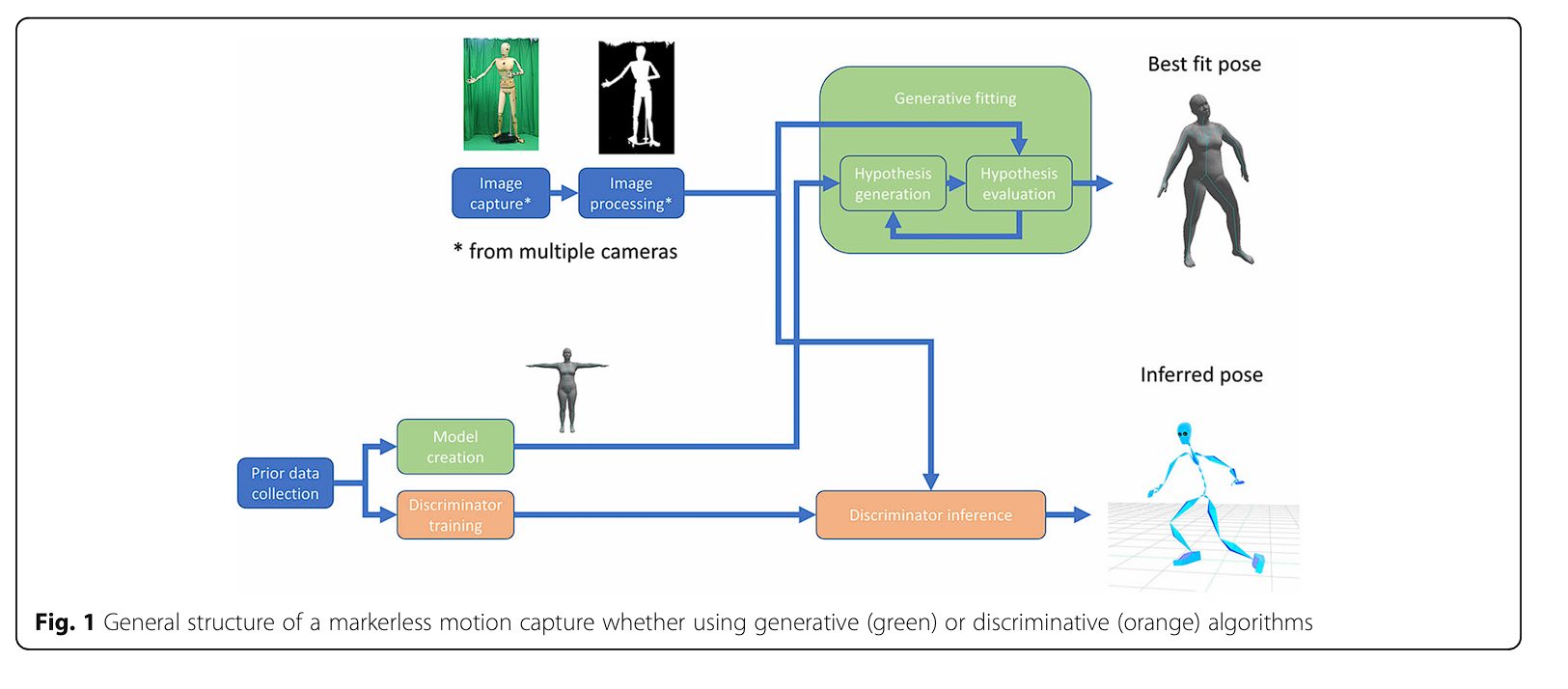

- Marker-less Systems: Modern systems use computer vision and deep learning to track motion without markers. These neural network models are trained on large datasets to recognize and predict movement patterns directly from video data, without the need for physical markers.

Basics of Motion Tracking

In general, motion tracking follows the process below.

Marker-Based Tracking

- Marker Placement: In marker-based tracking, visual markers are placed in the scene or on the objects of interest (for example, on a human). These markers are high-contrast patterns, fiducial markers, or physical objects with known geometry that cameras can detect easily.

- Detection and Recognition: The tracking system detects these markers in each video frame and recognizes them.

- Tracking Motion: Once the markers are detected, the markers’ positions are tracked over time by following their movement from frame to frame. The relative motion between markers is what provides information about the movement of objects.

- Pose Estimation: By using the positions of multiple markers, the system can estimate the 3D pose (position and orientation) of the tracked objects or the camera.
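As an illustration of the pose-estimation step, the sketch below recovers a rigid rotation and translation from three or more markers whose layout on the object is known. It uses the Kabsch algorithm (via NumPy's SVD); the article does not name a specific method, so that choice is an assumption, as are the marker coordinates. It also assumes the markers' 3D positions have already been measured, for example by triangulating them from several cameras.

```python
import numpy as np

def estimate_rigid_pose(model_pts, observed_pts):
    """Kabsch algorithm: find R, t minimizing ||R @ model + t - observed||.

    model_pts:    (N, 3) marker positions in the object's own frame (known layout)
    observed_pts: (N, 3) the same markers measured in world coordinates
    Needs N >= 3 markers that are not all on one line.
    """
    model_centroid = model_pts.mean(axis=0)
    observed_centroid = observed_pts.mean(axis=0)
    # Center both point sets so that only the rotation remains to be found.
    p = model_pts - model_centroid
    q = observed_pts - observed_centroid
    # SVD of the 3x3 cross-covariance matrix.
    u, _, vt = np.linalg.svd(p.T @ q)
    # Guard against a reflection so that det(R) = +1.
    d = np.sign(np.linalg.det(vt.T @ u.T))
    rotation = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    translation = observed_centroid - rotation @ model_centroid
    return rotation, translation

# Hypothetical layout of four markers on a rigid object (metres).
markers = np.array([[0.0, 0.0, 0.0],
                    [0.1, 0.0, 0.0],
                    [0.0, 0.2, 0.0],
                    [0.0, 0.0, 0.3]])
# Simulate the object rotating 30 degrees about z and moving.
theta = np.deg2rad(30)
true_r = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                   [np.sin(theta),  np.cos(theta), 0.0],
                   [0.0, 0.0, 1.0]])
true_t = np.array([1.0, 2.0, 0.5])
observed = markers @ true_r.T + true_t

r, t = estimate_rigid_pose(markers, observed)
print(np.allclose(r, true_r), np.allclose(t, true_t))  # True True
```

With noisy real measurements the same function returns the least-squares best fit, which is why more than the minimum three markers are typically used.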

Marker-less Tracking

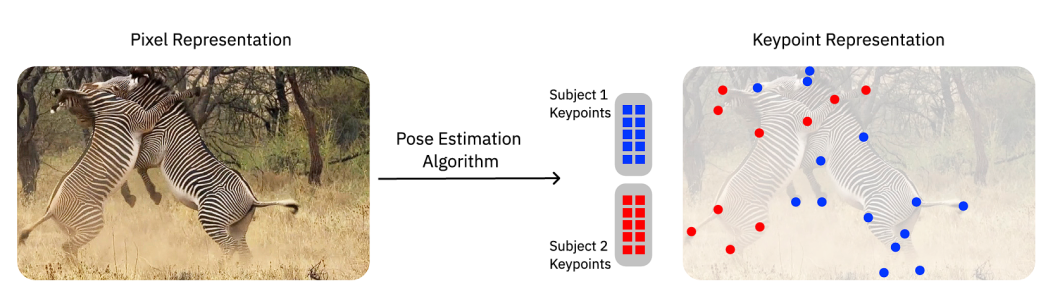

- Feature Extraction: Marker-less tracking uses deep learning models to extract features such as corners, edges, textures, or track points (such as joints in humans). These features serve as reference points for tracking just like a marker.

- Feature Matching: Similar to marker-based tracking, the system matches these features between consecutive frames to follow each feature’s movement and track its motion over time.

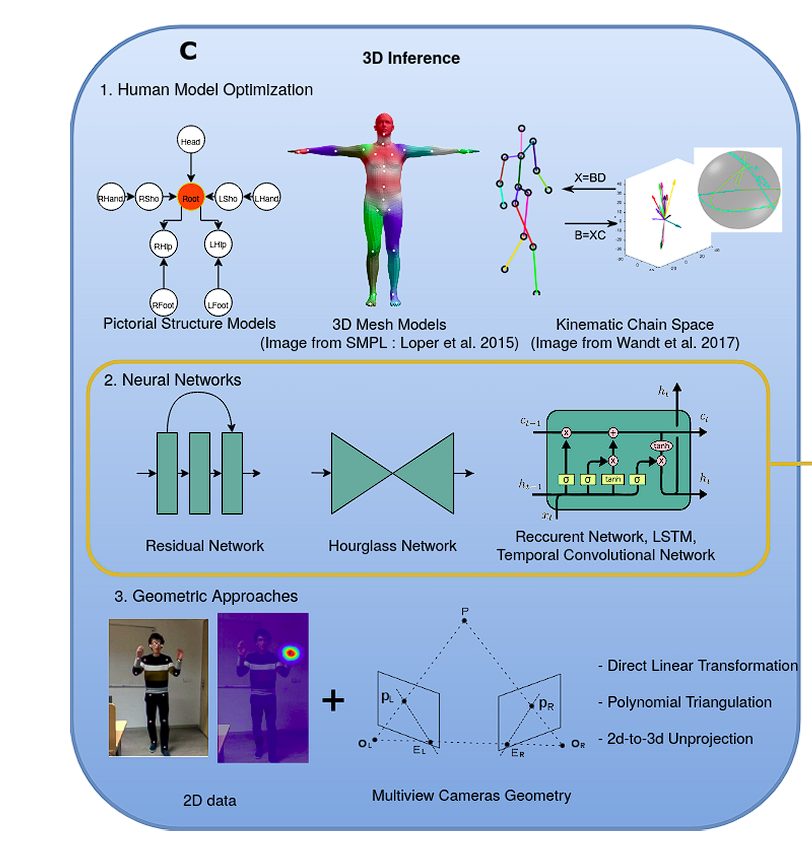

- Motion Estimation: Various algorithms, such as optical flow or structure-from-motion (SfM), are used for motion estimation and tracking.

- Depth Estimation: Techniques such as stereo vision or depth sensors are used to estimate the depth of the scene for 3D motion tracking without markers.
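For the stereo-vision case of depth estimation, depth follows from similar triangles: in a rectified camera pair with focal length f (in pixels) and baseline B, a point whose horizontal disparity between the left and right images is d pixels lies at depth Z = f·B/d. The sketch below applies that relation with NumPy; the focal length, baseline, and disparity values are made-up examples, and computing the disparity map itself (by matching pixels between the two images) is not shown.

```python
import numpy as np

def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth in metres for a rectified stereo pair: Z = f * B / d.

    Pixels with zero or negative disparity have no valid depth and map to inf.
    """
    disparity = np.asarray(disparity_px, dtype=np.float64)
    depth = np.full_like(disparity, np.inf)
    valid = disparity > 0
    depth[valid] = focal_px * baseline_m / disparity[valid]
    return depth

# Hypothetical rig: 700 px focal length, 12 cm baseline.
depths = depth_from_disparity([84.0, 42.0, 0.0], focal_px=700.0, baseline_m=0.12)
print(depths)  # 1 m, 2 m, and inf for the pixel with no disparity
```

Because depth is inversely proportional to disparity, a one-pixel disparity error matters far more for distant points than for nearby ones.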

Marker-less tracking is used in scenarios where placing markers is impossible or impractical, such as sports analysis, surveillance, or robotics. It allows more flexible tracking and can operate in diverse environments.

Key Terms in Motion Tracking

- Motion Vectors: Mathematical representations of object movement that indicate the direction and magnitude of motion.

- Key points: Distinctive points in an image, such as corners or body joints, that can be reliably located and tracked across frames.

- Markers:

  - Passive Markers: Reflective markers that bounce infrared light back to the cameras.

  - Active Markers: LEDs that emit light.

- Skeleton: A digital representation of a person’s body structure, made of interconnected joints and segments that model the human skeletal system.

- Inverse Kinematics (IK): Used to calculate the joint angles needed to place a part of the skeleton (e.g., a hand) in a desired position.

- Motion Capture Suit: A suit fitted with multiple markers and sensors to capture the movement of a person wearing that suit.
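Inverse kinematics has a closed-form solution in its simplest case: a planar two-segment limb (say, upper arm and forearm) with the shoulder at the origin. The sketch below uses the law of cosines to find the shoulder and elbow angles that place the wrist at a target point, returning one of the two mirror-image solutions. The segment lengths and target are illustrative, and full-body IK solvers in animation tools handle many more joints and constraints, often iteratively.

```python
import math

def two_link_ik(x, y, l1, l2):
    """Return (shoulder, elbow) angles in radians that place the end of a
    planar two-link chain at (x, y). Picks the solution with elbow >= 0.
    Raises ValueError if the target is out of reach.
    """
    dist_sq = x * x + y * y
    # Law of cosines gives the elbow angle.
    cos_elbow = (dist_sq - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if not -1.0 <= cos_elbow <= 1.0:
        raise ValueError("target out of reach")
    elbow = math.acos(cos_elbow)
    # Direction to the target, minus the offset introduced by the bent elbow.
    shoulder = math.atan2(y, x) - math.atan2(l2 * math.sin(elbow),
                                             l1 + l2 * math.cos(elbow))
    return shoulder, elbow

def forward_kinematics(shoulder, elbow, l1, l2):
    """End-effector position for given joint angles (used to check the IK)."""
    ex = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    ey = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return ex, ey

# Hypothetical limb: 0.30 m upper arm, 0.25 m forearm; reach for (0.35, 0.20).
s, e = two_link_ik(0.35, 0.20, 0.30, 0.25)
print(math.degrees(s), math.degrees(e))
print(forward_kinematics(s, e, 0.30, 0.25))  # approximately (0.35, 0.20)
```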

Techniques and Algorithms Used in Motion Tracking

Optical Flow

Optical flow is a Computer Vision (CV) method that calculates the motion of objects between consecutive frames. It works by analyzing the motion of pixels between frames. There are several methods for calculating optical flow.

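- Lucas-Kanade Method: A popular optical flow method developed by Bruce D. Lucas and Takeo Kanade in the early 1980s. It assumes the flow is roughly constant within a small neighborhood of each pixel and solves for it by least squares, and it has since become one of the foundational techniques in computer vision.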

- Horn-Schunck Method: Uses a global approach to estimate optical flow by minimizing an energy function. It provides dense motion vectors but is computationally intensive.
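The core of Lucas-Kanade can be sketched in a few lines of NumPy. Brightness constancy gives one linear equation per pixel, I_x·u + I_y·v + I_t = 0, and because the flow (u, v) is assumed to be the same for every pixel in a window, the window's equations can be solved together by least squares. This single-window version leaves out the image pyramids and per-pixel windows that practical implementations (such as OpenCV's `cv2.calcOpticalFlowPyrLK`) add; the synthetic Gaussian blob is only a test input.

```python
import numpy as np

def lucas_kanade_window(frame1, frame2):
    """Estimate one (u, v) flow vector for a whole window by least squares.

    Assumes brightness constancy (Ix*u + Iy*v + It = 0) and a single flow
    vector shared by every pixel in the window, as Lucas-Kanade does.
    """
    f1 = frame1.astype(np.float64)
    f2 = frame2.astype(np.float64)
    # np.gradient returns the derivative along rows (y) first, then columns (x).
    gy1, gx1 = np.gradient(f1)
    gy2, gx2 = np.gradient(f2)
    # Averaging both frames' gradients improves accuracy for small motions.
    ix = 0.5 * (gx1 + gx2)
    iy = 0.5 * (gy1 + gy2)
    it = f2 - f1  # temporal derivative
    # One equation per pixel: [Ix, Iy] @ [u, v] = -It
    a = np.stack([ix.ravel(), iy.ravel()], axis=1)
    b = -it.ravel()
    flow, *_ = np.linalg.lstsq(a, b, rcond=None)
    return flow  # [u, v] in pixels per frame

def gaussian_blob(size, cx, cy, sigma=5.0):
    """Synthetic test image: a smooth bright blob centred at (cx, cy)."""
    y, x = np.mgrid[0:size, 0:size]
    return np.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))

# The blob moves 0.3 px right and 0.2 px up (negative y) between frames.
frame1 = gaussian_blob(41, 20.0, 20.0)
frame2 = gaussian_blob(41, 20.3, 19.8)
u, v = lucas_kanade_window(frame1, frame2)
print(f"estimated flow: u={u:.3f}, v={v:.3f}")  # close to u=0.3, v=-0.2
```

The linearization behind the equation only holds for small motions, which is why real implementations run it coarse-to-fine on an image pyramid.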

Feature-Based Tracking

Feature-based tracking involves detecting and tracking distinctive features (key points) in an image. These features are matched across frames to estimate motion.

- SIFT (Scale-Invariant Feature Transform): Detects and describes local features in an image. It is tolerant to changes in scale, rotation, and illumination.

- SURF (Speeded-Up Robust Features): Similar to SIFT but faster and more efficient. It uses integral images and a fast Hessian matrix-based detector to identify key points.
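Once descriptors have been computed for the key points in two frames (for example with SIFT, which OpenCV exposes as `cv2.SIFT_create`), they are typically matched by nearest neighbour. A common filter is Lowe's ratio test, which keeps a match only when the closest descriptor is clearly closer than the second closest. The sketch below assumes descriptors and key point coordinates are already available as NumPy arrays; the random 128-dimensional vectors are stand-ins for real SIFT descriptors, and the brute-force distance matrix is only practical for small numbers of key points.

```python
import numpy as np

def ratio_test_match(desc1, desc2, ratio=0.75):
    """Match each descriptor in desc1 to its nearest neighbour in desc2.

    A match is kept only if the nearest distance is below `ratio` times the
    second-nearest distance (Lowe's ratio test). desc2 needs at least 2 rows.
    Returns a list of (index_in_desc1, index_in_desc2) pairs.
    """
    # Brute-force Euclidean distances, shape (len(desc1), len(desc2)).
    dists = np.linalg.norm(desc1[:, None, :] - desc2[None, :, :], axis=2)
    matches = []
    for i, row in enumerate(dists):
        nearest, second = np.argsort(row)[:2]
        if row[nearest] < ratio * row[second]:
            matches.append((i, int(nearest)))
    return matches

def motion_vectors(kp1, kp2, matches):
    """Displacement (dx, dy) of each matched key point between the frames."""
    return np.array([kp2[j] - kp1[i] for i, j in matches])

# Synthetic example: 10 key points reappear in the next frame in shuffled
# order with slightly noisy descriptors, each shifted by (+3, -1) pixels.
rng = np.random.default_rng(0)
desc1 = rng.random((10, 128))
kp1 = rng.uniform(0, 640, size=(10, 2))
perm = rng.permutation(10)  # frame-2 index j holds frame-1 point perm[j]
desc2 = desc1[perm] + rng.normal(scale=0.01, size=(10, 128))
kp2 = kp1[perm] + np.array([3.0, -1.0])

matches = ratio_test_match(desc1, desc2)
print(len(matches), motion_vectors(kp1, kp2, matches).mean(axis=0))
# expected: 10 matches, mean displacement (3, -1)
```

The resulting per-point displacements are exactly the motion vectors described in the key terms above.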

Background Subtraction

A technique to detect moving objects in a video sequence by comparing each frame to a reference background model. The difference between the current frame and the background model highlights the moving objects.

The process starts by creating a background model that represents the stationary objects. In the following frames of the video, the current frame is compared to the background model to identify pixels or regions that have changed significantly. These indicate motion in the scene.

- Gaussian Mixture Model (GMM): A statistical approach that models the background as a mixture of Gaussian distributions. It can adapt to changes in the background over time.

- Running Average: Maintains a running average of the background and updates it with each new frame. It is simple and effective for static backgrounds.
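The running-average approach can be sketched with NumPy as below: each frame is compared with the current background model to produce a foreground mask, and then blended into the model with a small learning rate. The class name, the `alpha` learning rate, and the difference threshold are illustrative assumptions. For the GMM approach, OpenCV provides `cv2.createBackgroundSubtractorMOG2`.

```python
import numpy as np

class RunningAverageBackground:
    """Running-average background model for grayscale frames (illustrative).

    background <- (1 - alpha) * background + alpha * frame
    Pixels differing from the background by more than `threshold`
    are marked as foreground (moving).
    """

    def __init__(self, first_frame, alpha=0.05, threshold=25.0):
        self.background = first_frame.astype(np.float64)
        self.alpha = alpha
        self.threshold = threshold

    def apply(self, frame):
        frame = frame.astype(np.float64)
        # Compare with the model before updating it.
        mask = np.abs(frame - self.background) > self.threshold
        # Blend the new frame into the background model.
        self.background = (1 - self.alpha) * self.background + self.alpha * frame
        return mask

# Synthetic scene: flat grey background, then a bright 10x10 object appears.
empty = np.full((60, 80), 100, dtype=np.uint8)
model = RunningAverageBackground(empty)
frame = empty.copy()
frame[20:30, 40:50] = 200
mask = model.apply(frame)
print(mask.sum())  # 100 foreground pixels
```

Because every pixel is blended into the model, an object that stops moving gradually fades into the background; some implementations update only pixels classified as background to slow this down.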

Deep Learning for Motion Tracking

The integration of computer vision and deep learning has made marker-less motion tracking practical. Because deep learning models are trained on large datasets, they can perform well in diverse environments where traditional motion tracking methods often fail.

Feature Extraction with Deep Learning

Convolutional Neural Networks (CNNs) can extract features such as edges, corners, and textures from images or video frames. Pre-trained CNN models (e.g., VGG, ResNet, or MobileNet) can then be fine-tuned on motion-tracking-specific datasets.

Feature Matching and Estimation

Siamese networks and correlation filters are used to match key points and regions of interest across frames.

These methods work by learning to identify similarities between features extracted from different frames, which tends to keep them robust even in challenging conditions such as occlusions or changes in viewpoint.
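Correlation-filter trackers build on a simple idea: slide a template of the target over the next frame and take the position with the strongest correlation response, computed efficiently with the FFT. The sketch below shows only that plain cross-correlation step in NumPy. It is not a learned correlation filter such as MOSSE, nor a Siamese network (which would correlate learned feature maps instead of raw pixels), and mean-subtracting the patches is a simplification of full normalized cross-correlation. The random frame and the shift are synthetic test data.

```python
import numpy as np

def locate_template(frame, template):
    """Return (row, col) of the top-left corner where `template` best matches `frame`.

    Uses circular cross-correlation computed with the FFT on mean-subtracted arrays.
    """
    f = frame.astype(np.float64) - frame.mean()
    t = template.astype(np.float64) - template.mean()
    # Zero-pad the template to the frame size.
    padded = np.zeros_like(f)
    padded[: t.shape[0], : t.shape[1]] = t
    # Cross-correlation theorem: corr = IFFT(FFT(frame) * conj(FFT(template))).
    response = np.real(np.fft.ifft2(np.fft.fft2(f) * np.conj(np.fft.fft2(padded))))
    row, col = np.unravel_index(np.argmax(response), response.shape)
    return int(row), int(col)

# Synthetic example: cut a 16x16 target out of a noise frame, then shift the
# whole frame by 3 rows down and 2 columns left to simulate motion.
rng = np.random.default_rng(1)
frame1 = rng.random((64, 64))
template = frame1[20:36, 30:46].copy()
frame2 = np.roll(frame1, shift=(3, -2), axis=(0, 1))
print(locate_template(frame1, template), locate_template(frame2, template))
# expected: (20, 30) (23, 28)
```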

Object Detection and Tracking

Detectors such as YOLO, SSD, and Faster R-CNN detect and localize objects of interest in each frame. Once objects are detected, trackers such as SORT (Kalman filtering with IoU-based association) or DeepSORT (which adds a deep-learning appearance descriptor) link them across frames, helping to handle occlusions and appearance changes.
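The association step in SORT-style tracking can be sketched as pairing each existing track's box with the new detection it overlaps most, measured by intersection-over-union (IoU). This is a simplified, assumed version: SORT itself first predicts each track's box with a Kalman filter and solves the assignment with the Hungarian algorithm, and DeepSORT also compares appearance features. The greedy matching, the 0.3 IoU threshold, and the box coordinates below are illustrative choices. Boxes are (x1, y1, x2, y2) in pixels.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def greedy_associate(tracks, detections, iou_threshold=0.3):
    """Greedily pair track boxes with detection boxes, highest IoU first.

    tracks: dict of track_id -> box; detections: list of boxes.
    Returns ({track_id: detection_index}, list of unmatched detection indices).
    """
    candidates = sorted(
        ((iou(box, det), tid, di)
         for tid, box in tracks.items()
         for di, det in enumerate(detections)),
        reverse=True,
    )
    matches, used_dets = {}, set()
    for score, tid, di in candidates:
        if score < iou_threshold:
            break  # all remaining pairs overlap too little
        if tid in matches or di in used_dets:
            continue  # track or detection already taken
        matches[tid] = di
        used_dets.add(di)
    unmatched = [di for di in range(len(detections)) if di not in used_dets]
    return matches, unmatched

# Two existing tracks and three detections in the next frame (hypothetical boxes).
tracks = {1: (10, 10, 50, 50), 2: (100, 100, 140, 160)}
detections = [(104, 102, 144, 162), (12, 11, 52, 51), (300, 300, 340, 340)]
matches, new_objects = greedy_associate(tracks, detections)
print(matches, new_objects)
# track 1 -> detection 1, track 2 -> detection 0; detection 2 would start a new track
```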

Optical Flow Estimation

Models such as FlowNet or PWC-Net directly estimate dense optical flow fields from image sequences. These models learn to predict the motion of pixels or feature points between consecutive frames and provide dense motion information, which can be used in place of traditional optical flow estimation methods.

RNN and LSTM Networks for Temporal Tracking

Recurrent Neural Networks (RNNs) and their variants such as Long Short-Term Memory (LSTM) networks are capable of sequential motion prediction. These models can predict the future positions of objects based on their past movements, by maintaining a memory of previous frames.

LSTMs and other RNNs are also used to capture temporal dependencies for action recognition. In a typical CNN-LSTM pipeline, a CNN extracts spatial features from each frame, while the LSTM processes these features over time to recognize complex actions and movements.

GANs for Generating and Predicting Motion

Autoencoders and Generative Adversarial Networks (GANs) are powerful tools for modeling motion: they can generate realistic motion sequences, predict future frames, and fill in missing frames in a video sequence.

Specific models such as VideoGAN and MotionGAN are designed for these tasks.

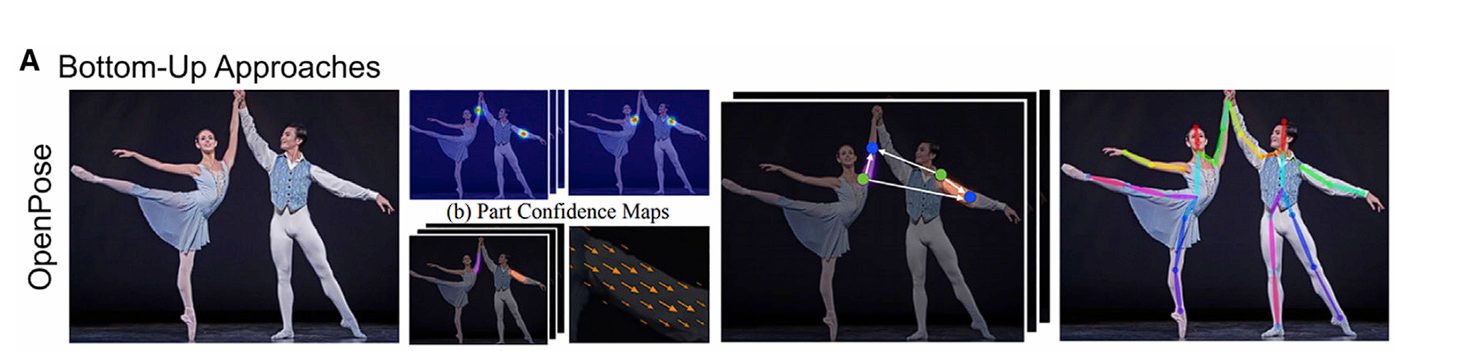

OpenPose

OpenPose is a state-of-the-art, real-time, multi-person keypoint detection library. It can detect up to 135 key points on the human body, covering the body, hands, feet, and face, including joints such as the elbow.

Organizations across industries use this kind of motion tracking: for example, healthcare for posture analysis, sports for performance tracking, and entertainment for motion capture and animation.

Advantages:

- High accuracy in detecting human key points.

- Ability to handle multiple people in the same frame.

- Open source.
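Keypoints from a pose estimator such as OpenPose are typically post-processed into quantities like joint angles for posture or performance analysis. As a hedged illustration, the sketch below computes the angle at a joint from three 2D keypoints. The coordinates are invented, and the function does not rely on OpenPose's actual output format; it only assumes each keypoint is an (x, y) pair.

```python
import math

def joint_angle(a, b, c):
    """Angle ABC in degrees, where b is the joint (e.g. shoulder, elbow, wrist)."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("keypoints must be distinct")
    # Clamp to [-1, 1] to guard against floating-point rounding.
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))

# Hypothetical image coordinates (pixels) for shoulder, elbow, and wrist.
shoulder, elbow, wrist = (320, 200), (320, 300), (420, 300)
print(joint_angle(shoulder, elbow, wrist))  # approximately 90 degrees
```

Tracking such an angle frame by frame gives a simple time series for posture or technique analysis; 2D angles depend on camera viewpoint, so 3D keypoints are preferable when available.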

Challenges in Motion Tracking

Motion tracking faces a variety of obstacles, including:

Handling Occlusions and Complex Backgrounds

- Occlusions: One of the most significant challenges in motion tracking is dealing with occlusions, where objects are partially or fully obscured by other objects. This can lead to loss of tracking and inaccuracies in motion estimation.

- Complex Backgrounds: Environments with dynamic and cluttered backgrounds can confuse motion-tracking algorithms, making it difficult to distinguish between the moving object and the background.

Deep learning models generally handle these problems better than other motion-tracking methods.

Robustness to Variations in Lighting and Environment

- Lighting Conditions: Changes in lighting, such as shadows, reflections, and varying illumination, affect the accuracy of motion-tracking algorithms.

- Environmental Factors: Weather conditions such as rain, fog, and snow degrade the performance of motion-tracking systems, which can create safety risks in outdoor applications like autonomous driving.

Implementing Motion Tracking

In this blog, we looked at how motion tracking accurately follows the movement of objects and people, and how it provides valuable insights and capabilities in fields ranging from security and healthcare to sports analytics and virtual reality.

Motion tracking techniques can be divided into two groups based on whether they use markers. Optical flow, feature-based tracking (e.g., SIFT, SURF), and background subtraction are examples of marker-less techniques. These are further automated and enhanced by deep learning models such as YOLO and OpenPose.

Marker-based techniques, by contrast, use infrared cameras in a controlled environment to capture the precise movement of actors or objects, as seen in film, animation, and biomechanics.

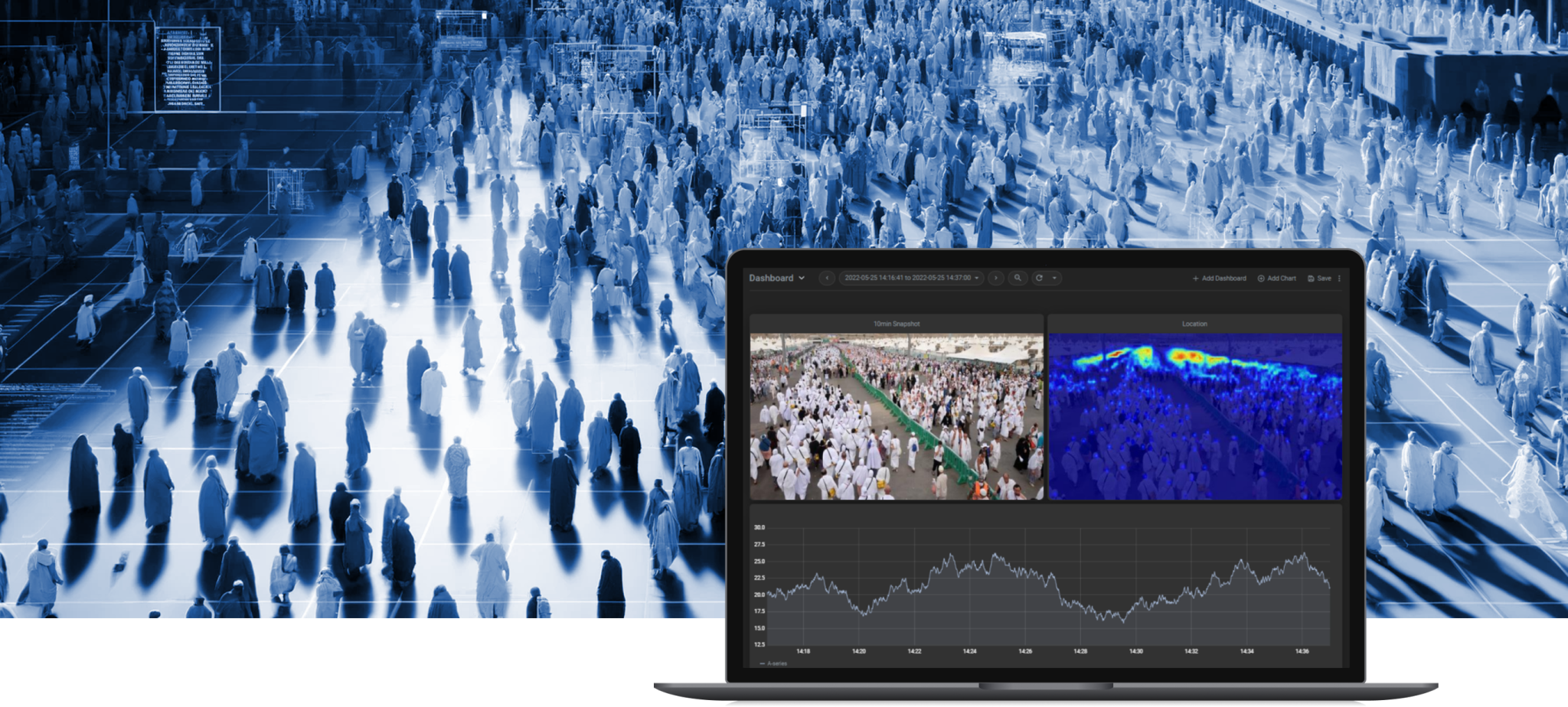

Real-World Computer Vision

Viso Suite allows companies to integrate computer vision tasks, like motion tracking, into existing workflows and tech stacks. By consolidating the entire ML pipeline, it lets teams manage their smart operations in a single interface, eliminating the need for point solutions. Find out more about Viso Suite by booking a demo with our team of experts.
